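[K8s scheduler workflow and extensions] How to add extension plugins to the scheduler, disable default plugins, and create multiple schedulers (kubernetes scheduler plugins, CSDN Blog)

K8s scheduling framework design and scheduler plugins development and deployment examples (2024) (arthurchiao.art)

A. An implemented example: https://blog.wubw.fun/2021/12/build-kubernetes-scheduler-framework-plugin.html

B. This one is newer and worth considering: https://github.com/rossgray/custom-k8s-scheduler/tree/main

For deployment, refer to: Configure Multiple Schedulers | Kubernetes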


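TODO √ Deployed successfully; write my own scheduler on top of this.

Build a Docker image and deploy it with a Deployment.

Possible improvement: use a map to store podName.

Building the image with Docker: Dockerfile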

```dockerfile
FROM golang:1.19 AS builder
WORKDIR /app
COPY . .
ENV GOPROXY=https://goproxy.cn
RUN go get sample-scheduler
RUN go build -o sample-scheduler .
RUN chmod +x sample-scheduler
CMD ["ls"]
```

```dockerfile
# Build stage
FROM golang:1.19 AS builder
WORKDIR /app
COPY . .
ENV GOPROXY=https://goproxy.cn
RUN go mod download
RUN go get sample-scheduler
# Build a static binary so it runs on musl-based Alpine
RUN CGO_ENABLED=0 go build -o sample-scheduler .

# Runtime stage
FROM alpine:latest
# Relative paths in CMD (scheduler.conf, scheduler-config.yaml) resolve against this directory
WORKDIR /app
COPY --from=builder /app/sample-scheduler /app/sample-scheduler
COPY --from=builder /app/scheduler.conf /app/scheduler.conf
# scheduler-config.yaml must also be present in /app (copy it here or mount it at run time)
RUN chmod +x /app/sample-scheduler
ENTRYPOINT ["/app/sample-scheduler"]
CMD ["--authentication-kubeconfig=scheduler.conf", "--authorization-kubeconfig=scheduler.conf", "--config=scheduler-config.yaml", "--secure-port=10260"]
```

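Earlier CMD variants that were tried:

```dockerfile
CMD ["ls"]
CMD ["./sample-scheduler --authentication-kubeconfig=scheduler.conf --authorization-kubeconfig=scheduler.conf --config=scheduler-config.yaml --secure-port=10260"]
```

The second variant does not work. In exec form each argument must be a separate array element, so Docker looks for an executable literally named `./sample-scheduler --authentication-kubeconfig=...`. Splitting the flags into separate elements, as in the ENTRYPOINT/CMD pair of the multi-stage Dockerfile, avoids this.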

Build the image:

```shell
sudo docker build -t my_scheduler:3.0 .
```

Push to the Alibaba Cloud container registry:

```shell
sudo docker login --username=aliyun7797214054 registry.cn-hangzhou.aliyuncs.com
```

Pull the image:

```shell
docker pull registry.cn-hangzhou.aliyuncs.com/szy_is_me/my_scheduler:[image version]
```

Run the container:

```shell
docker run -p 10260:10260 registry.cn-hangzhou.aliyuncs.com/szy_is_me/my_scheduler:[image version]
```

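Cross-compiling for Linux on Windows:

```shell
# bash, Git Bash or WSL
CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build sample-scheduler.go
# PowerShell equivalent (the VAR=value prefix syntax does not work there)
$env:CGO_ENABLED="0"; $env:GOOS="linux"; $env:GOARCH="amd64"; go build sample-scheduler.go
```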

ozota1/k8s-scheduling-framework-small-sample: small sample implementation of kubernetes scheduling-framework (github.com)

```shell
sudo docker tag my_cluster_scheduler acdream666/my_scheduler_c:1.1
sudo docker push acdream666/my_scheduler_c:1.0
docker pull acdream666/my_scheduler_c:1.0
docker run -p 10260:10260 registry.cn-hangzhou.aliyuncs.com/szy_is_me/my_cluster_scheduler:1.0
docker run -p 10260:10260 my_cluster_scheduler:1.1
```

Version 1.1 runs.

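Ideas for extending the scheduler

QueueSort: priority-based ordering is provided by default. However, it only takes effect after you create a PriorityClass and reference it from the Pod. One option is to add a field that can be used directly instead.

The importance field can be defined in the Pod YAML through annotations. `metadata.annotations` stores extra information as key-value pairs, so you can give a Pod a custom annotation that expresses its importance. The following example Pod YAML defines an annotation named `importance`:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
  annotations:
    importance: "5"
spec:
  containers:
  - name: my-container
    image: nginx
```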

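In this example, `metadata.annotations.importance` sets the Pod's importance to 5. You can assign different importance values to different Pods as needed, so that the scheduler can sort them by importance.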

Filter: can select nodes with suitable hardware; nodes that lack the required hardware are filtered out directly.
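A standard-library-only sketch of that Filter decision follows. It assumes nodes advertise their hardware through a hypothetical label key `example.com/hardware` and that a Pod's requirement is expressed as label key/value pairs. In a real Filter plugin, a failed check would return an Unschedulable status for that node. Note that the built-in NodeAffinity plugin already filters on `spec.nodeSelector`, so a custom Filter mainly pays off when the rule cannot be written as a selector.

```go
package main

import "fmt"

// requiredHardwareKey is a hypothetical node label naming the hardware a node has.
const requiredHardwareKey = "example.com/hardware"

// node is a simplified stand-in for a Node: its name and labels.
type node struct {
	Name   string
	Labels map[string]string
}

// fits mimics a Filter decision: a node passes only if every required
// label is present with the required value.
func fits(required map[string]string, n node) bool {
	for k, v := range required {
		if n.Labels[k] != v {
			return false
		}
	}
	return true
}

// filterNodes returns the names of the nodes that pass fits, preserving order.
func filterNodes(required map[string]string, nodes []node) []string {
	var out []string
	for _, n := range nodes {
		if fits(required, n) {
			out = append(out, n.Name)
		}
	}
	return out
}

func main() {
	nodes := []node{
		{Name: "node-a", Labels: map[string]string{requiredHardwareKey: "gpu"}},
		{Name: "node-b", Labels: map[string]string{requiredHardwareKey: "cpu"}},
		{Name: "node-c"},
	}
	fmt.Println(filterNodes(map[string]string{requiredHardwareKey: "gpu"}, nodes))
}
```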

Score: can be used to pin a Pod to a fixed host.
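A minimal sketch of such a Score rule, assuming a hypothetical Pod annotation `example.com/preferred-node` that names the desired host. The constants mirror the framework's score range of 0 to 100. Keep in mind that Score only expresses a preference: its result is added to the weighted scores of the other enabled Score plugins, and a node that fails Filter is never scored. A hard pin therefore needs Filter (or `nodeAffinity`) as well.

```go
package main

import "fmt"

const (
	maxNodeScore = 100 // mirrors framework.MaxNodeScore
	minNodeScore = 0   // mirrors framework.MinNodeScore
	// preferredNodeKey is a hypothetical annotation naming the desired host.
	preferredNodeKey = "example.com/preferred-node"
)

// scoreNode gives the preferred host the maximum score and every other node the minimum.
func scoreNode(podAnnotations map[string]string, nodeName string) int64 {
	if want, ok := podAnnotations[preferredNodeKey]; ok && want == nodeName {
		return maxNodeScore
	}
	return minNodeScore
}

func main() {
	ann := map[string]string{preferredNodeKey: "node-b"}
	for _, n := range []string{"node-a", "node-b", "node-c"} {
		fmt.Println(n, scoreNode(ann, n))
	}
}
```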

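Error when starting the scheduler container:

```text
Error response from daemon: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "/usr/local/bin/kube-scheduler": stat /usr/local/bin/kube-scheduler: no such file or directory: unknown
```

The image has no binary at `/usr/local/bin/kube-scheduler`, so the container's `command` must point to the path where the scheduler binary actually lives in the image.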

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: hello-minikube-1
  name: hello-minikube-1
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-minikube-1
  template:
    metadata:
      labels:
        app: hello-minikube-1
        nodeGroup: "1"
    spec:
      containers:
      - image: kicbase/echo-server:1.0
        imagePullPolicy: IfNotPresent
        name: echo-server
      restartPolicy: Always
      schedulerName: custom-scheduler
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: hello-minikube-2
  name: hello-minikube-2
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-minikube-2
  template:
    metadata:
      labels:
        app: hello-minikube-2
        nodeGroup: "2"
    spec:
      containers:
      - image: kicbase/echo-server:1.0
        imagePullPolicy: IfNotPresent
        name: echo-server
      restartPolicy: Always
      schedulerName: custom-scheduler
```

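Install minikube.

Deploy B to minikube.

ID of the image built from B: `7ee438bac860`. Tag it for the Alibaba Cloud registry:

```shell
docker tag 7ee438bac860 registry.cn-hangzhou.aliyuncs.com/szy_is_me/custom-k8s-scheduler_test:1.0
```

Resulting image: `registry.cn-hangzhou.aliyuncs.com/szy_is_me/custom-k8s-scheduler_test:1.0`

Inspect the scheduler Pod:

```shell
kubectl describe po custom-scheduler-f88b6bcbb-7rsfz --namespace=kube-system
```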

Manifest based on the Configure Multiple Schedulers example:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: my-scheduler
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: my-scheduler-as-kube-scheduler
subjects:
- kind: ServiceAccount
  name: my-scheduler
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:kube-scheduler
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-scheduler-config
  namespace: kube-system
data:
  my-scheduler-config.yaml: |
    # v1 is GA since Kubernetes 1.25; older clusters used v1beta2
    apiVersion: kubescheduler.config.k8s.io/v1
    kind: KubeSchedulerConfiguration
    profiles:
      # Must match spec.schedulerName in the Pods this scheduler should handle
      - schedulerName: my-scheduler
    leaderElection:
      leaderElect: false
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: my-scheduler-as-volume-scheduler
subjects:
- kind: ServiceAccount
  name: my-scheduler
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: system:volume-scheduler
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    component: scheduler
    tier: control-plane
  name: my-scheduler
  namespace: kube-system
spec:
  selector:
    matchLabels:
      component: scheduler
      tier: control-plane
  replicas: 1
  template:
    metadata:
      labels:
        component: scheduler
        tier: control-plane
        version: second
    spec:
      serviceAccountName: my-scheduler
      containers:
      - command:
        # Must be the path of the scheduler binary inside this image
        - /usr/local/bin/kube-scheduler
        - --config=/etc/kubernetes/my-scheduler/my-scheduler-config.yaml
        image: registry.cn-hangzhou.aliyuncs.com/szy_is_me/custom-k8s-scheduler_test:1.0
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10259
            scheme: HTTPS
          initialDelaySeconds: 15
        name: kube-second-scheduler
        readinessProbe:
          httpGet:
            path: /healthz
            port: 10259
            scheme: HTTPS
        resources:
          requests:
            cpu: '0.1'
        securityContext:
          privileged: false
        volumeMounts:
          - name: config-volume
            mountPath: /etc/kubernetes/my-scheduler
      hostNetwork: false
      hostPID: false
      volumes:
        - name: config-volume
          configMap:
            name: my-scheduler-config
```
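Which scheduler picks up a Pod depends only on name matching. A scheduler handles a Pod only if the Pod's `spec.schedulerName` equals one of its profiles' `schedulerName` values, and an empty `spec.schedulerName` is defaulted by the API server to `default-scheduler`. The small standard-library sketch below models that rule; the function name is illustrative. It shows why the test Deployments, which use `schedulerName: custom-scheduler`, would be ignored by a scheduler whose only profile is `my-scheduler`, and would stay Pending unless some other scheduler uses that name.

```go
package main

import "fmt"

// defaultSchedulerName is what the API server fills in when a Pod's
// spec.schedulerName is left empty.
const defaultSchedulerName = "default-scheduler"

// handles reports whether a scheduler whose profiles use profileNames
// would pick up a Pod with the given spec.schedulerName.
func handles(profileNames []string, podSchedulerName string) bool {
	if podSchedulerName == "" {
		podSchedulerName = defaultSchedulerName
	}
	for _, name := range profileNames {
		if name == podSchedulerName {
			return true
		}
	}
	return false
}

func main() {
	mySchedulerProfiles := []string{"my-scheduler"} // profile name from the ConfigMap above
	for _, podScheduler := range []string{"my-scheduler", "custom-scheduler", ""} {
		fmt.Printf("%q -> %v\n", podScheduler, handles(mySchedulerProfiles, podScheduler))
	}
}
```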

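Deploy B to minikube:

```shell
minikube start --force --nodes 3 -p custom-scheduler-demo
minikube addons enable registry -p custom-scheduler-demo
```

Create the project:

```shell
go env -w GOPROXY=https://goproxy.cn,direct
```

Directory structure of the scheduler:

```text
custom-scheduler/
├── cmd/
│   └── custom-scheduler/
│       └── main.go
├── pkg/
│   └── scheduler/
├── go.mod
└── README.md
```

In this directory structure:

- `cmd/custom-scheduler/main.go`: the scheduler's entry point, containing its startup logic.
- `pkg/scheduler/`: the custom scheduler implementation. You can create more subdirectories here as needed to organize the code.
- `go.mod`: the Go module file that manages the project's dependencies.
- `README.md`: the project documentation, usually covering an introduction, usage instructions, and contribution guidelines.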

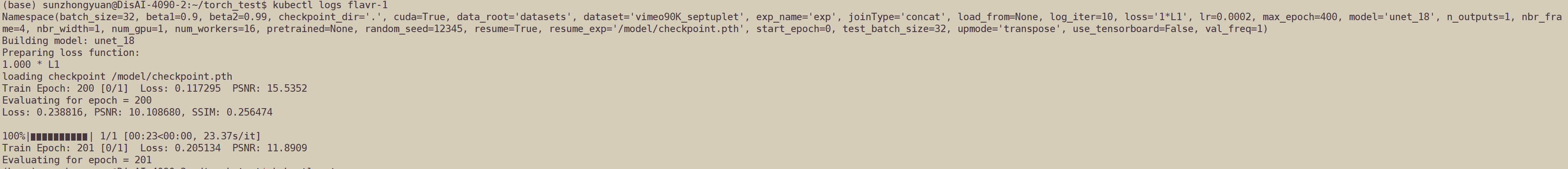

Experiment: run FLAVR on a Hygon GPU and test resuming training from a checkpoint.

Running with Docker: how to use the Dockerfile. Pay attention to the data-volume mounts here.

```shell
docker build --no-cache -t flavr:latest .
… --ulimit stack=-1:-1 --ulimit memlock=-1:-1 flavr:latest
docker exec -it flavr /bin/bash
```

Single machine, single GPU:

```shell
python main.py --batch_size 32 --test_batch_size 32 --dataset vimeo90K_septuplet --loss 1*L1 --max_epoch 200 --lr 0.0002 --data_root datasets --n_outputs 1 --num_gpu 1 --resume --checkpoint_dir . --resume_exp /model/checkpoint.pth --log_iter 10
```

Resuming from a checkpoint: the original code did not support resuming training, so I added it to main:

```python
if args.resume:
    if os.path.exists(args.resume_exp):
        ...  # checkpoint-loading code lost in extraction
    else:
        print('No checkpoint')
```

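GPT framework

When you want to customize the Kubernetes scheduler, here is a simplified example that explains how to build a custom scheduler by extending the scheduling framework.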

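```go
package main

import (
	"context"
	"os"
	"time"

	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/kubernetes/cmd/kube-scheduler/app"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

// Name is the plugin name referenced in KubeSchedulerConfiguration.
const Name = "CustomScheduler"

// CustomScheduler is a skeleton plugin hooking several extension points.
// Signatures follow roughly Kubernetes v1.24-v1.27; check them against the
// k8s.io/kubernetes version in your go.mod.
type CustomScheduler struct {
	handle framework.Handle
}

// Compile-time checks that the interfaces are implemented.
var (
	_ framework.PreFilterPlugin = &CustomScheduler{}
	_ framework.FilterPlugin    = &CustomScheduler{}
	_ framework.ScorePlugin     = &CustomScheduler{}
	_ framework.ReservePlugin   = &CustomScheduler{}
	_ framework.PermitPlugin    = &CustomScheduler{}
)

func (cs *CustomScheduler) Name() string { return Name }

// PreFilter runs once per pod before filtering; a nil result means "all nodes".
func (cs *CustomScheduler) PreFilter(ctx context.Context, state *framework.CycleState, pod *v1.Pod) (*framework.PreFilterResult, *framework.Status) {
	return nil, framework.NewStatus(framework.Success)
}

func (cs *CustomScheduler) PreFilterExtensions() framework.PreFilterExtensions { return nil }

// Filter decides whether the pod can run on the given node (nil status = success).
func (cs *CustomScheduler) Filter(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeInfo *framework.NodeInfo) *framework.Status {
	return nil
}

// Score ranks feasible nodes; higher is better.
func (cs *CustomScheduler) Score(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) (int64, *framework.Status) {
	return 0, nil
}

// ScoreExtensions returns nil because no NormalizeScore step is needed here.
func (cs *CustomScheduler) ScoreExtensions() framework.ScoreExtensions { return nil }

// Reserve and Unreserve bracket the assumption that the pod will land on nodeName.
func (cs *CustomScheduler) Reserve(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) *framework.Status {
	return nil
}

func (cs *CustomScheduler) Unreserve(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) {
}

// Permit can approve, reject, or delay (wait) the binding.
func (cs *CustomScheduler) Permit(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) (*framework.Status, time.Duration) {
	return framework.NewStatus(framework.Success), 0
}

// New is the plugin factory (pre-1.28 signature; newer releases add a context.Context first parameter).
func New(_ runtime.Object, h framework.Handle) (framework.Plugin, error) {
	return &CustomScheduler{handle: h}, nil
}

func main() {
	// Build a kube-scheduler binary with this plugin registered under Name.
	command := app.NewSchedulerCommand(app.WithPlugin(Name, New))
	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}
```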

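The code above is a simplified Go example showing how to create a custom scheduler and extend the scheduling process through the framework's extension points. In a real project you will need more complex logic, tailored to your own requirements and scenarios.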
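To make the order of the two central extension points concrete, here is a toy, standard-library-only simulation of one scheduling cycle. The `pod`, `node`, `filterFunc`, and `scoreFunc` types are hypothetical stand-ins for the framework's types. Filter removes infeasible nodes, Score ranks the survivors, and the highest total wins. The real framework also runs PreFilter, NormalizeScore, Reserve, Permit, and Bind, evaluates nodes in parallel, and weights each Score plugin, so treat this only as a mental model.

```go
package main

import (
	"errors"
	"fmt"
)

// Hypothetical, simplified stand-ins for the framework's types.
type pod struct {
	Name     string
	CPUMilli int64
}

type node struct {
	Name         string
	FreeCPUMilli int64
}

// filterFunc and scoreFunc model the Filter and Score extension points.
type filterFunc func(p pod, n node) bool
type scoreFunc func(p pod, n node) int64

// scheduleOne runs every filter on each node, sums the scores of the
// feasible ones, and returns the best node (the first one wins on ties).
func scheduleOne(p pod, nodes []node, filters []filterFunc, scorers []scoreFunc) (string, error) {
	best, bestScore, found := "", int64(0), false
	for _, n := range nodes {
		feasible := true
		for _, f := range filters {
			if !f(p, n) {
				feasible = false
				break
			}
		}
		if !feasible {
			continue
		}
		var total int64
		for _, s := range scorers {
			total += s(p, n)
		}
		if !found || total > bestScore {
			best, bestScore, found = n.Name, total, true
		}
	}
	if !found {
		return "", errors.New("no feasible node for pod " + p.Name)
	}
	return best, nil
}

func main() {
	nodes := []node{{"node-a", 500}, {"node-b", 2000}, {"node-c", 1000}}
	enoughCPU := func(p pod, n node) bool { return n.FreeCPUMilli >= p.CPUMilli }
	mostFree := func(p pod, n node) int64 { return n.FreeCPUMilli - p.CPUMilli }
	name, err := scheduleOne(pod{"web", 800}, nodes, []filterFunc{enoughCPU}, []scoreFunc{mostFree})
	fmt.Println(name, err)
}
```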

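Please improve this example, for instance by making it schedule Pods onto nodes at random.

To make the example schedule Pods onto nodes at random, implement Score so that it returns a random value, have ScoreExtensions return the plugin itself, and use NormalizeScore to map the random values onto the framework's score range. The following addition to the example implements random scheduling through random scores:

```go
package randomscore

import (
	"context"
	"math/rand"

	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

// Name is the plugin name to enable at the score extension point.
const Name = "CustomScheduler"

// CustomScheduler scores every feasible node randomly.
type CustomScheduler struct{}

var _ framework.ScorePlugin = &CustomScheduler{}

func (cs *CustomScheduler) Name() string { return Name }

// Score returns a random value in [1, 10]. The package-level math/rand
// functions are safe for concurrent use, which matters because the
// scheduler scores nodes in parallel.
func (cs *CustomScheduler) Score(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) (int64, *framework.Status) {
	score := int64(rand.Intn(10) + 1)
	return score, nil
}

// ScoreExtensions returns the plugin itself so that NormalizeScore is called.
func (cs *CustomScheduler) ScoreExtensions() framework.ScoreExtensions { return cs }

// NormalizeScore maps [1, 10] onto the framework's [0, MaxNodeScore] range.
func (cs *CustomScheduler) NormalizeScore(ctx context.Context, state *framework.CycleState, pod *v1.Pod, scores framework.NodeScoreList) *framework.Status {
	for i := range scores {
		scores[i].Score = scores[i].Score * framework.MaxNodeScore / 10
	}
	return nil
}

// New is the plugin factory; register it with app.WithPlugin(Name, New) in main
// (pre-1.28 signature; newer releases add a context.Context first parameter).
func New(_ runtime.Object, _ framework.Handle) (framework.Plugin, error) {
	return &CustomScheduler{}, nil
}
```

In the code above, the Score method uses `math/rand` to give every candidate node a random score. When the Kubernetes scheduler calls this plugin's Score method, these random values decide which node the Pod is placed on.

Note that this is only a simplified example. In practice you may need more logic to handle node resources, Pod requirements, cluster state, and so on.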
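The simulation below illustrates why random scores lead to uniform placement. It uses only the standard library and reproduces the random Score plugin, its normalization, and a highest-score pick that breaks ties uniformly at random (reservoir sampling, similar in spirit to how kube-scheduler selects a host). One caveat for a real cluster: each enabled Score plugin's weighted score is added up, so placement is only fully random if the other Score plugins are disabled in the profile.

```go
package main

import (
	"fmt"
	"math/rand"
)

const maxNodeScore = 100 // mirrors framework.MaxNodeScore

// randomScore mimics the plugin's Score: a value in [1, 10].
func randomScore(r *rand.Rand) int64 { return int64(r.Intn(10) + 1) }

// normalize scales raw scores from [1, 10] up to at most maxNodeScore.
func normalize(scores []int64) {
	for i := range scores {
		scores[i] = scores[i] * maxNodeScore / 10
	}
}

// pickHost returns the index of the highest score, breaking ties uniformly
// at random with reservoir sampling over the equal maxima.
func pickHost(r *rand.Rand, scores []int64) int {
	best, seen := 0, 1
	for i := 1; i < len(scores); i++ {
		switch {
		case scores[i] > scores[best]:
			best, seen = i, 1
		case scores[i] == scores[best]:
			seen++
			if r.Intn(seen) == 0 {
				best = i
			}
		}
	}
	return best
}

// simulate places `rounds` pods on `nodes` nodes and counts placements per node.
func simulate(seed int64, nodes, rounds int) []int {
	r := rand.New(rand.NewSource(seed))
	counts := make([]int, nodes)
	for k := 0; k < rounds; k++ {
		scores := make([]int64, nodes)
		for i := range scores {
			scores[i] = randomScore(r)
		}
		normalize(scores)
		counts[pickHost(r, scores)]++
	}
	return counts
}

func main() {
	fmt.Println(simulate(1, 3, 3000)) // roughly 1000 placements per node
}
```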

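I want to deploy the scheduler above to my Kubernetes cluster. How should I do it?

To deploy a custom scheduler to a Kubernetes cluster, follow these steps:

Build the custom scheduler binary: make sure the required development tools, including a Go toolchain, are installed. Then run `go build` on your scheduler code to produce the binary.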

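Create the scheduler's Docker image: write a Dockerfile, for example:

```dockerfile
# Build stage; pick a Go version that matches the k8s.io/* modules in go.mod
FROM golang:1.16 AS builder
WORKDIR /go/src/app
COPY . .
# Build a static binary so it runs on musl-based Alpine
RUN CGO_ENABLED=0 go build -o custom-scheduler .

# Runtime stage
FROM alpine:latest
COPY --from=builder /go/src/app/custom-scheduler /usr/local/bin/custom-scheduler
CMD ["custom-scheduler"]
```

Then build the image with `docker build`:

```shell
docker build -t custom-scheduler:v1 .
```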

Push the image to a container registry: use `docker push` to push the image to the registry of your choice, such as Docker Hub or a private registry.

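Configure the Kubernetes scheduler: on the control-plane (master) node, the default scheduler is defined by a static Pod manifest, usually `/etc/kubernetes/manifests/kube-scheduler.yaml`. Editing the container spec in that file to point at your custom image and its configuration replaces the default scheduler. A less invasive option is to run the custom scheduler as a second scheduler with its own `schedulerName` (see Configure Multiple Schedulers) and select it per Pod with `spec.schedulerName`.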

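Restart the scheduler Pod: the default kube-scheduler runs as a static Pod, so the kubelet restarts it automatically once the manifest file is saved. Deleting it with kubectl only removes its mirror Pod, which the kubelet recreates without restarting the scheduler. If the scheduler runs as an ordinary Deployment instead, deleting its Pod makes the Deployment create a fresh one:

```shell
kubectl delete pod -n kube-system [scheduler-pod-name]
```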

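Verify the custom scheduler: create test Pods in the cluster and run `kubectl describe pod [pod-name]` to confirm they were scheduled according to your custom logic. The Scheduled event typically names the scheduler that assigned the node.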

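These are the concrete steps for deploying a custom scheduler. Adjust the commands and configuration to your environment and requirements, and test thoroughly before using it in production.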

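Implement a simple example by extending the Kubernetes scheduling framework's extension points, and give the concrete steps for deploying it to a Kubernetes cluster.

Absolutely, let's outline a simple example of extending the Kubernetes scheduling framework and provide the steps to deploy it to a Kubernetes cluster: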

Step 1: Developing the scheduler extension plugin

Plugin development involves creating a Go project and writing some code. Here's a basic example of a Score plugin named "PrioritySort" that gives every node the same preset score:

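```go
package main

import (
	"context"
	"os"

	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/kubernetes/cmd/kube-scheduler/app"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

// PrioritySort gives every node the same preset score.
// NOTE: kube-scheduler's built-in QueueSort plugin is already named
// "PrioritySort", so registering this name will most likely be rejected as a
// duplicate; choose a unique name in practice.
type PrioritySort struct{}

var _ framework.ScorePlugin = &PrioritySort{}

func (ps *PrioritySort) Name() string { return "PrioritySort" }

// Score returns a constant, so on its own it does not change the node ranking.
func (ps *PrioritySort) Score(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) (int64, *framework.Status) {
	return 1, nil
}

func (ps *PrioritySort) ScoreExtensions() framework.ScoreExtensions { return ps }

// NormalizeScore leaves the scores unchanged.
func (ps *PrioritySort) NormalizeScore(ctx context.Context, state *framework.CycleState, pod *v1.Pod, scores framework.NodeScoreList) *framework.Status {
	return nil
}

// New is the plugin factory (pre-1.28 signature; newer releases add a context.Context first parameter).
func New(_ runtime.Object, _ framework.Handle) (framework.Plugin, error) {
	return &PrioritySort{}, nil
}

func main() {
	// Compile the plugin into a scheduler binary.
	command := app.NewSchedulerCommand(app.WithPlugin("PrioritySort", New))
	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}
```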

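Step 2: Building the plugin into a custom scheduler binary

kube-scheduler cannot load plugins from a Go `.so` file at runtime. Out-of-tree plugins are compiled into a scheduler binary instead: register the plugin in `main` with `app.WithPlugin` (from `k8s.io/kubernetes/cmd/kube-scheduler/app`) and build that program with `go build`:

```shell
CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build -o custom-scheduler .
```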

Step 3: Deploying the extension to a Kubernetes cluster

Next, you need to configure the scheduler to enable this score plugin. This is done in a `KubeSchedulerConfiguration` file:

```yaml
# v1 is GA since Kubernetes 1.25; older clusters use v1beta2/v1beta3
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
  - plugins:
      score:
        enabled:
          - name: "PrioritySort"
...
```

`KubeSchedulerConfiguration` is not an API object served by the cluster, so it cannot be applied with `kubectl apply`. It is passed to the scheduler binary with `--config`. A common approach is to store it in a ConfigMap and mount that into the scheduler Pod:

```shell
kubectl create configmap my-scheduler-config -n kube-system --from-file=./my-kube-scheduler-config.yaml
```

Note: This is a simple and very basic example. Writing actual scheduler plugins might require a deep understanding of the Kubernetes API and the scheduling process. You also need to ensure that your code is safe and robust before deploying it in a production environment.

What should the directory structure of a custom Kubernetes scheduler project look like?

A custom Kubernetes scheduler project's directory structure might look something like this:

```text
custom-scheduler/
├── main.go
├── pkg/
│   ├── apis/
│   ├── client/
│   ├── controller/
│   └── scheduler/
├── go.mod
├── go.sum
└── README.md
```

Here's a short explanation of what each directory and file is for:

- `main.go`: the entry point of the project.
- `pkg/`: usually contains the main implementation code of the project.
  - `apis/`: definitions of your custom resources.
  - `client/`: generated client code that makes it easier to write the controller logic.
  - `controller/`: the controller's logic.
  - `scheduler/`: the core logic of your custom scheduler.
- `go.mod` and `go.sum`: the Go module information, including dependencies.
- `README.md`: introduces the project and explains how to use it.

Please note, this directory structure isn’t written in stone and can certainly be adapted to suit the specific needs of the project or the team’s preferences.

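Building on this, how can I make a Pod that is being scheduled for the first time go through the default scheduling logic, and make a Pod that has been scheduled before go back to the node it was first scheduled to?

A plugin sketch: Filter keeps every node feasible for a Pod it has not seen before, so the other plugins decide as usual, and keeps only the recorded node for a Pod seen before. PostBind records the node of the first successful binding. Enable the plugin at both the filter and postBind extension points.

```go
package main

import (
	"context"
	"os"
	"sync"

	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/component-base/logs"
	"k8s.io/klog/v2"
	"k8s.io/kubernetes/cmd/kube-scheduler/app"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

const Name = "SampleScheduler"

// SampleScheduler remembers the first node each pod was bound to.
type SampleScheduler struct {
	lock                  sync.RWMutex
	podFirstScheduledNode map[string]string // "namespace/name" -> node name
}

var (
	_ framework.FilterPlugin   = &SampleScheduler{}
	_ framework.PostBindPlugin = &SampleScheduler{}
)

func (cs *SampleScheduler) Name() string { return Name }

func podKey(pod *v1.Pod) string { return pod.Namespace + "/" + pod.Name }

// Filter lets a first-time pod through on every node, but restricts a
// previously bound pod to the node it was first bound to.
func (cs *SampleScheduler) Filter(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeInfo *framework.NodeInfo) *framework.Status {
	cs.lock.RLock()
	defer cs.lock.RUnlock()
	first, ok := cs.podFirstScheduledNode[podKey(pod)]
	if !ok || nodeInfo.Node().Name == first {
		return nil
	}
	return framework.NewStatus(framework.Unschedulable, "pod must return to node "+first)
}

// PostBind records the node only on the pod's first successful binding.
func (cs *SampleScheduler) PostBind(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeName string) {
	klog.Infof("pod %v is bound to %v", podKey(pod), nodeName)
	cs.lock.Lock()
	defer cs.lock.Unlock()
	if _, ok := cs.podFirstScheduledNode[podKey(pod)]; !ok {
		cs.podFirstScheduledNode[podKey(pod)] = nodeName
	}
}

// New is the plugin factory (pre-1.28 signature; newer releases add a context.Context first parameter).
func New(_ runtime.Object, _ framework.Handle) (framework.Plugin, error) {
	return &SampleScheduler{podFirstScheduledNode: make(map[string]string)}, nil
}

func main() {
	logs.InitLogs()
	defer logs.FlushLogs()
	command := app.NewSchedulerCommand(app.WithPlugin(Name, New))
	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}
```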
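The bookkeeping behind this sticky-node rule can be tested in isolation. The standard-library-only sketch below uses a hypothetical `stickyStore` type and keys pods by `namespace/name`. Two caveats apply to the real plugin. First, the map lives in memory, so it is lost whenever the scheduler restarts. Second, pod names are only stable for StatefulSet Pods (such as `web-0`); a replacement Pod created by a Deployment gets a new random suffix and is therefore treated as first-time.

```go
package main

import (
	"fmt"
	"sync"
)

// stickyStore remembers the first node each pod was bound to.
// It is in-memory only and is lost when the process restarts.
type stickyStore struct {
	mu    sync.RWMutex
	first map[string]string // "namespace/name" -> node name
}

func newStickyStore() *stickyStore {
	return &stickyStore{first: make(map[string]string)}
}

func key(namespace, name string) string { return namespace + "/" + name }

// recordBind mirrors PostBind: only the first binding is kept.
func (s *stickyStore) recordBind(namespace, name, nodeName string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	k := key(namespace, name)
	if _, ok := s.first[k]; !ok {
		s.first[k] = nodeName
	}
}

// allows mirrors Filter: an unseen pod may use any node, while a pod seen
// before may only use its first node.
func (s *stickyStore) allows(namespace, name, nodeName string) bool {
	s.mu.RLock()
	defer s.mu.RUnlock()
	want, ok := s.first[key(namespace, name)]
	return !ok || want == nodeName
}

func main() {
	s := newStickyStore()
	fmt.Println(s.allows("default", "web-0", "node-b"))
	s.recordBind("default", "web-0", "node-a")
	s.recordBind("default", "web-0", "node-c") // ignored: first node already recorded
	fmt.Println(s.allows("default", "web-0", "node-a"), s.allows("default", "web-0", "node-b"))
}
```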