This post mainly follows "k8s RoCE 部署: k8s-rdma-shared-dev-plugin + macvlan cni_rdma shared dev plugin-CSDN博客". It records the problems I ran into and the parts of my setup that differ.

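Multi-Network CRD

The master field in the Multi-Network CRD config file should be set to the RDMA NIC of a node where the RDMA device plugin is deployed. This became a problem here because of node 232.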

RDMA device plugin can then be deployed on nodes with feature.node.kubernetes.io/custom-rdma.available=true, which indicates that the node is RDMA capable and RDMA modules are loaded.

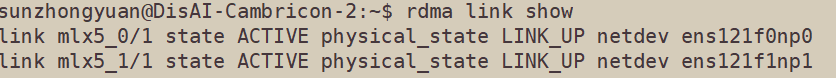

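The problem is that 232 does not carry the feature.node.kubernetes.io/custom-rdma.available=true label, so the RDMA device plugin cannot be deployed on 232. I chose the 寒武纪2 (Cambricon 2) node instead and changed master in macvlan_cx5_bond.yaml to ens121f0np0, which was all that was needed.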
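To see which nodes can actually host the plugin, it helps to query the label directly. The following is a minimal sketch: the function names list_rdma_nodes and show_rdma_label are made up for illustration, and only standard kubectl flags (-l, -L, -o name) are used.

```shell
# Label quoted from the plugin documentation above.
RDMA_LABEL="feature.node.kubernetes.io/custom-rdma.available=true"

# List only the nodes that carry the RDMA label (hypothetical helper name).
list_rdma_nodes() {
  kubectl get nodes -l "$RDMA_LABEL" -o name
}

# Show the label value as an extra column for every node;
# the column stays empty on nodes that lack the label.
show_rdma_label() {
  # ${RDMA_LABEL%%=*} strips "=true" and leaves just the label key.
  kubectl get nodes -L "${RDMA_LABEL%%=*}"
}
```

Running list_rdma_nodes and finding a node missing from the output matches the situation described for 232: the plugin will not be scheduled there.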

2024.6.21:

master (string, optional): name of the host interface to enslave. Defaults to default route interface.

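According to the CNI documentation, master can actually be omitted here; the interface is then selected automatically.

Because some pods failed to run properly, I removed master.

With master removed, testing Hygon's virtualized RDMA ran into a problem: RDMA traffic did not get through. Setting master to the Hygon NIC fixed it (the cause is still unknown).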
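Given that result, setting master explicitly seems the safer choice. The sketch below writes an example NetworkAttachmentDefinition to a separate file so that it does not overwrite an existing macvlan_cx5_bond.yaml. The network name and namespace come from the pod annotation used in this post, and ens121f0np0 is the NIC mentioned earlier. The ipam section (whereabouts with 10.56.217.0/24, inferred from the net1 addresses in the test output) and the cniVersion are assumptions, not the original file's contents.

```shell
# Write an example macvlan NetworkAttachmentDefinition with master set explicitly.
# ASSUMPTION: the ipam section and cniVersion are illustrative; keep whatever
# your existing macvlan_cx5_bond.yaml already uses.
cat > macvlan_cx5_bond.example.yaml <<'EOF'
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: macvlan-cx5-bond-conf
  namespace: default
spec:
  config: '{
      "cniVersion": "0.3.1",
      "type": "macvlan",
      "master": "ens121f0np0",
      "mode": "bridge",
      "ipam": {
        "type": "whereabouts",
        "range": "10.56.217.0/24"
      }
    }'
EOF

# Apply it once master matches the RDMA NIC on the target node:
# kubectl apply -f macvlan_cx5_bond.example.yaml
```

Replace ens121f0np0 with the RDMA NIC of the node the pods will land on.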

k8s-rdma-shared-dev-plugin-config-map.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: rdma-devices
  namespace: kube-system
data:
  config.json: |
    {
        "periodicUpdateInterval": 300,
        "configList": [{
             "resourceName": "cx5_bond_shared_devices_a",
             "rdmaHcaMax": 1000,
             "selectors": {
               "vendors": ["15b3"],
               "deviceIDs": ["1017","1019"]
             }
           },
           {
             "resourceName": "cx6dx_shared_devices_b",
             "rdmaHcaMax": 500,
             "selectors": {
               "vendors": ["15b3"],
               "deviceIDs": ["101d"]
             }
           }
        ]
    }
```

The vendors value is obtained with cat /sys/class/net/ens61f0np0/device/vendor

The deviceIDs value is obtained with cat /sys/class/infiniband/mlx5_0/device/device

PS: replace ens61f0np0 and mlx5_0 with your own interface and device names.
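The two lookups can be combined into one pass over every RDMA device on the host. This is a minimal sketch: list_rdma_ids is a made-up helper name, and the optional argument for overriding the sysfs root is only there to allow dry runs. It reads vendor through /sys/class/infiniband rather than /sys/class/net, which reaches the same PCI device.

```shell
# Print "<rdma device> <vendor> <deviceID>" for every RDMA device on this host.
# The optional argument overrides the sysfs root (illustrative hook for dry runs).
list_rdma_ids() {
  root="${1:-/sys/class/infiniband}"
  for dev in "$root"/*; do
    # Skip entries without readable PCI identifiers.
    [ -r "$dev/device/vendor" ] || continue
    vendor=$(cat "$dev/device/vendor")
    device=$(cat "$dev/device/device")
    # sysfs reports values such as 0x15b3; config.json lists them without "0x".
    echo "$(basename "$dev") ${vendor#0x} ${device#0x}"
  done
}
```

Calling list_rdma_ids on a node prints one line per device, and the second and third columns are the values to put into vendors and deviceIDs.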

Start the test pods

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mofed-test-cx5-bond-pod1
  annotations:
    k8s.v1.cni.cncf.io/networks: default/macvlan-cx5-bond-conf
spec:
  restartPolicy: OnFailure
  containers:
  - image: mellanox/rping-test
    name: mofed-test-ctr
    securityContext:
      capabilities:
        add: [ "IPC_LOCK" ]
    resources:
      limits:
        rdma/cx5_bond_shared_devices_a: 1
      requests:
        rdma/cx5_bond_shared_devices_a: 1
    command:
    - sh
    - -c
    - |
      ls -l /dev/infiniband /sys/class/infiniband /sys/class/net
      sleep 1000000
```

```
pod/mofed-test-cx5-bond-pod1 created
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mofed-test-cx5-bond-pod2
  annotations:
    k8s.v1.cni.cncf.io/networks: default/macvlan-cx5-bond-conf
spec:
  restartPolicy: OnFailure
  containers:
  - image: mellanox/rping-test
    name: mofed-test-ctr
    securityContext:
      capabilities:
        add: [ "IPC_LOCK" ]
    resources:
      limits:
        rdma/cx5_bond_shared_devices_a: 1
      requests:
        rdma/cx5_bond_shared_devices_a: 1
    command:
    - sh
    - -c
    - |
      ls -l /dev/infiniband /sys/class/infiniband /sys/class/net
      sleep 1000000
```

```
pod/mofed-test-cx5-bond-pod2 created
```

Enter mofed-test-cx5-bond-pod1

```
(base) sunzhongyuan@DisAI-4090-2:~/rdma/rdma_share$ kubectl exec -it mofed-test-cx5-bond-pod1 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
[...]
for client to connect... *
```

The mlx5_0 device name used here can be looked up with ibv_devinfo -v

Enter mofed-test-cx5-bond-pod2

```
(base) sunzhongyuan@DisAI-4090-2:~$ kubectl exec -it mofed-test-cx5-bond-pod2 bash
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
[root@mofed-test-cx5-bond-pod2 /]
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1450
        inet 172.16.1.56  netmask 255.255.255.0  broadcast 172.16.1.255
        inet6 fe80::5cb3:2cff:fe9a:9ca4  prefixlen 64  scopeid 0x20<link>
        ether 5e:b3:2c:9a:9c:a4  txqueuelen 0  (Ethernet)
        RX packets 23  bytes 1786 (1.7 KiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 13  bytes 962 (962.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 1000  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

net1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 10.56.217.72  netmask 255.255.255.0  broadcast 10.56.217.255
        inet6 fe80::48c3:ebff:fe82:6f6f  prefixlen 64  scopeid 0x20<link>
        ether 4a:c3:eb:82:6f:6f  txqueuelen 0  (Ethernet)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 16  bytes 1228 (1.1 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

[root@mofed-test-cx5-bond-pod2 /]
---------------------------------------------------------------------------------------
                    RDMA_Write BW Test
 Dual-port       : OFF          Device         : mlx5_0
 Number of qps   : 1            Transport type : IB
 Connection type : RC           Using SRQ      : OFF
 TX depth        : 128
 CQ Moderation   : 100
 Mtu             : 1024[B]
 Link type       : Ethernet
 GID index       : 4
 Max inline data : 0[B]
 rdma_cm QPs     : OFF
 Data ex. method : Ethernet
---------------------------------------------------------------------------------------
 local address: LID 0000 QPN 0x0064 PSN 0x5e7214 RKey 0x1825e4 VAddr 0x007f6f71de4000
 GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:56:217:72
 remote address: LID 0000 QPN 0x0047 PSN 0x3aa2bf RKey 0x1815d6 VAddr 0x007f8feae8c000
 GID: 00:00:00:00:00:00:00:00:00:00:255:255:10:56:217:74
---------------------------------------------------------------------------------------
 65536      5000             90.18              90.15              0.171953
---------------------------------------------------------------------------------------
```

The IP used here is the net1 address of pod1.
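The exact commands did not survive in the logs above, so the following is only a sketch of how such a test is usually started with ib_write_bw from the perftest tools. The device name and GID index are taken from the test output, and 10.56.217.74 is assumed to be pod1's net1 address because it appears as the remote GID on the pod2 side. The helper names bw_server and bw_client are made up for illustration.

```shell
RDMA_DEV="mlx5_0"         # device name, as reported by ibv_devinfo
GID_INDEX=4               # GID index shown in the test output
SERVER_IP="10.56.217.74"  # ASSUMPTION: net1 address of pod1 (remote GID above)

# Run inside pod1: start the server side and wait for a client.
bw_server() {
  ib_write_bw -d "$RDMA_DEV" -x "$GID_INDEX"
}

# Run inside pod2: connect to pod1's net1 address and run the bandwidth test.
bw_client() {
  ib_write_bw -d "$RDMA_DEV" -x "$GID_INDEX" "$SERVER_IP"
}
```

Start bw_server in pod1 first, then bw_client in pod2. Pointing the client at pod1's eth0 address instead of net1 would bypass the macvlan network that the RDMA device is attached to.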

References

k8s RoCE 部署: k8s-rdma-shared-dev-plugin + macvlan cni_rdma shared dev plugin-CSDN博客

RoCe on K8s 实践 - 朱亚光的博客 (zhuyaguang.github.io)